This talk was held in February 2022 at the ATX West Annual Exhibition of automation technology in Anaheim, California.

Below is a transcript of Mr. Nicholson’s presentation, whereby technical information about Symphoni is provided. This Use Case Study addressed a client’s request: To see if there was a faster and more efficient way to assemble their autoinjectors. They insisted that their core processes be respected while meeting their demand for speed, flexibility, and high-end performance. All while running two different autoinjectors on the same machine.

Ken Nicholson, R&D Director

00:07:00 | We’re going to start with an overview of the technology and some of the challenges that we needed to solve to be able to achieve this. What we needed to do was have very, very high levels of efficiency so that we could replicate standard hardware across many different common pieces to build an overall system, and be able to economically justify this and still have a value proposition.

00:32:19 | This first video is showing you a number of different instances of the Symphoni technology at play. We’ve got some real-time video and then we’re showing some high-speed video. The reason we do this is when people see one of our systems run, it’s a blur. The first thing that they assume is that we’re sacrificing either the process itself or being violent with the components. We put things on high-speed cameras to show that these motions are very calm. They are, in fact, very smooth and targeted. We’re coming in at very high speed, slowing down for a core process like insertion and then going to 8 g’s to get back over the slowdown as a targeted approach to do the pick.

01:25:07 | Let’s go back to the beginning. Custom Automation generally broke into three types of technology. You have Indexing Motion, which is in the middle in the sense that it does reasonable rates. It is somewhat flexible, but it stays in the middle of productivity versus flexibility versus input. Pallets and Robots is incredibly flexible but painfully slow. You’re generally talking about how many seconds to make a part. Whereas if you use a Continuous Motion technology, it’s how many parts per second. You can get very high levels of productivity with Continuous Motion, but it’s also very part specific. It’s very sensitive to the component itself. It’s not flexible at all. Retooling a continuous motion machine is 75% of the cost of a new machine. These categories and barriers irritate us, so we look for ways to blur the lines.

02:38:10 | One of our customers challenged us and said, “Look, Continuous Motion hasn’t changed in decades. Can you change the paradigm of what’s done with Continuous Motion but reduce the tooling and add more flexibility?” What came out of it is the journey that we went on, going through five generations of the Symphoni platform, culminating in 61 claims on multiple patents across four continents.

03:08:21 | This is a video of a demo machine that is at the show. You can see Pallets and Robots running at Continuous Motion rates of 280 parts a minute with two tools. In the first station we’re feeding in parts at random orientation. We are taking images with vision, passing them to two orientation servos that rotate infinitely. We pick up the parts, align them and load them to the pallet. This unload is showing a three-stop profile where we’re coming down and picking up the parts from the pallet. We’re lifting up, coming down over a reject bin where we could let go if we wanted to reject. We then go to a third position to the unload disc to recirculate the parts. What we’re doing on the demo machine is constructing and deconstructing a product just to show Symphoni’s core technology. This head here is doing right side up, upside down motions, and it’s making a mess of the parts on purpose so that when we send them downstream, we use vision to figure out where they are and do a correction. At this station, you can see this little panel that keeps showing up after we do orientation, because again, we’re taking pictures with vision, passing information and directly on the pallet

04:28:22 | This station is taking pictures, figuring out if the parts are right side up or upside down, and correcting them. At these rates and motions people assume that we’re being violent with the product, and that’s where we’re getting the time. But that’s not where Symphoni gets the timing and the efficiency. It is a culmination of many different factors. It’s not any one thing. It’s the summation of a whole bunch of different little pieces that we’re adding up to make a big number. One of the things about the system is true multicore processing. We have a lot of respect for the mass and distance.

05:23:07 | Each station that you see here, each pick-n-place (RSM Arm) that’s doing the manipulation, has a dedicated controller. We’re doing all the motion planning, all the input camming, all the triggering, the reading of IO, all the vision work in 800 microseconds so that we can be very, very efficient, focused on the task at hand. We respect the mass and distance.

05:45:18 | I have another system here. All the pieces from one system to the next, they’re all the same. There are different end effectors on the pick-n-places, depending on what we’re doing. This is an O-ring installation, for example, on the previous system. We’re doing radial orientation, part inversion.

06:09:02 | This is the manufacturing of a syringe plunger. But all those pick-n-places from the bottom up, they’re all the same core pieces. They’re all running the same software. It’s localized to a dedicated processor. The true multi-core processing means we have a controller for each one of those stations. With mass and distance, if you look at what we’re doing in each station, we’re not moving very far. One of the reasons that off-the-shelf robotics cannot achieve our rates is they are general purpose. They’re trying to cover too much territory to increase the number of applications they can be used for. Servos only care about mass and distance.

06:51:13 | We’ve limited the stroke on these guys to plus or minus 150 millimeters. Period. When you see the arms themselves, a lot of material is removed. It’s finite element analysis, studying and analyzing, to make sure we have enough structure to do the work. But we’re not going any further distance or carrying any more mass than necessary. We’ve got a dedicated controller doing all the work. On top of that it’s all synchronously cammed. Everything that we’re doing is in anticipation as a pallet comes into the station. We’re not waiting for it to get there. Because this is synchronously cammed we can guarantee where every servo is at all points. Every millisecond from start to finish is monitored and if any servo gets out of position, we have a very tight position following.
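The position-following check described here can be illustrated with a small sketch. This is not the Symphoni controller code: the function name, the 0.1 mm tolerance, and the sample positions are all hypothetical, chosen only to show the idea of comparing each planned cam position against the measured one and flagging the first sample that drifts too far.

```python
def first_following_fault(commanded, actual, tolerance):
    """Return the index of the first sample whose following error exceeds
    the tolerance, or None if the axis stayed on its planned path."""
    for i, (cmd, act) in enumerate(zip(commanded, actual)):
        if abs(cmd - act) > tolerance:
            return i
    return None


# Hypothetical planned vs. measured positions in millimeters, one per sample.
planned = [0.0, 1.0, 4.0, 9.0, 16.0]
measured = [0.0, 1.02, 3.97, 9.6, 16.0]

# Assumed tolerance of 0.1 mm; the real limit is not given in the talk.
fault_index = first_following_fault(planned, measured, tolerance=0.1)
if fault_index is not None:
    print(f"following error exceeded at sample {fault_index}: stop the module")
```

In a real system the response to a fault would be a controlled stop of the module; here it is reduced to a print statement.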

07:40:13 | If any of those servos get out of position, the system will take care of itself and shut down, which means we don’t have to wait for the pallet to get there before we actually commit. We also have offline simulation tools where, within our motion planning, when we figure out all these motions, the pallet is coming into the station as the pick-n-place is coming in to either pick a part or place a part or do some assembly work.

08:03:22 | Within our simulation tools that we do offline, we make sure that as a targeted approach, we’re making the most of that because we can guarantee that the pieces are going to be where they’re supposed to be. It’s amazing how much time is available when you go through the simulation and do the optimization. The other element is the input compensation.

08:26:08 | On the previous video I showed, we were doing radial orientation. We have a part coming in, we have a camera where we’re taking pictures to determine the radial orientation. We know that when we trigger that camera, because we study it and characterize all the devices on the cell, it takes 17 milliseconds from the point that we trigger it until the camera takes a picture. We take the 17 milliseconds, put it into our operating system, which is our baseline software. It looks at how fast it’s going. Whatever the rate is, it takes the 17 milliseconds and converts it into a distance. If you’re going very fast, it triggers early. If you’re going slower, it triggers late, and we get the 17 milliseconds back.
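The conversion of a known device latency into a trigger distance can be sketched as below. Only the 17 millisecond camera latency comes from the talk; the function name and the two part speeds are assumptions made for illustration, and the real operating system presumably works in master cam position rather than plain millimeters.

```python
def trigger_lead_mm(speed_mm_per_s, latency_ms):
    """Distance the part travels while the device is still reacting.

    Firing the trigger this far ahead of the target position means the
    device acts exactly when the part arrives.
    """
    return speed_mm_per_s * latency_ms / 1000.0


CAMERA_LATENCY_MS = 17  # characterized camera latency quoted in the talk

# Hypothetical part speeds: a fast run and a slow run of the same station.
lead_fast = trigger_lead_mm(1000.0, CAMERA_LATENCY_MS)
lead_slow = trigger_lead_mm(100.0, CAMERA_LATENCY_MS)

print(f"fast: trigger {lead_fast:.1f} mm before the target position")
print(f"slow: trigger {lead_slow:.1f} mm before the target position")
```

The faster the part moves, the further ahead of the target the trigger fires, which is the "triggers early" behavior described above.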

09:15:09 | We know that for the grippers, as with all the devices on a Symphoni system, they are characterized the same. It’s no longer a customer’s selection. This is the camera, this is the gripper, this is the offside welding, these are the pieces that are part of the system because we understand them. They’ve been characterized.

09:36:12 | All the output compensations are built in so that we can get all those little bits back for free. When a pallet shows up, for example, you have a pick-n-place coming in. Just as the pick-n-place gets there, the grippers close. Because we normally take 25 milliseconds to do that, we trigger that once again in advance based on the compensations going on. We give it a little bit of variability in the middle because we know the time. That allows us to get back a lot of free time that’s lying around. We can’t change physics, we can’t change the process. I’ve got a case study I’m going to show where we never take the time from the process.

10:17:11 | We do the core process the same as everybody else, but all the work before the value-add and all the work after the value-add, we get that out of the system so that we can spend most of our time being as efficient as we can doing the value-add work. To touch on these points: multicore processing is in each module, there is a dedicated processor so that we can do all the work up to 12 axes of motion locally, and do that every hundred microseconds.

10:48:16 | We slow down where we need to do the work, being very targeted, but then we go fast to get to the next spot, then slow down and do the next thing. We have ultimate respect for the physics. Servos only care about mass and distance, so it’s just as important in these Symphoni systems what it doesn’t do, what we’ve restricted, when we say, “too bad, if it’s bigger, you can’t do it. If it’s longer, you can’t do it here.” But if it’s within the volume and the operating characteristics for a configurable module that we have off-the-shelf, that we make in advance, then we can really optimize that after you’ve done the work, gets 6-8 g’s from there to the next spot. The synchronization I’ve already mentioned allows you to get the most out of the system and focus on stuff that you need to: the latency with input cammings and also the fact that all the devices are pre-characterized.

11:47:06 | I show this as something that you can’t normally do with automation. We can run this thing at 25 parts a minute, we can run it at 280, we can change it on the fly. Because of all that math going on in the background, the input compensations and everything else line up (the trigger of the cameras, the closing of the gripper fingers), and if we’re doing force or position monitoring it aligns all that as well.

12:17:00 | It all comes up together, it all goes down together. It allows us to do the troubleshooting at the bottom end where you’re running slow. It allows us to also have the same characteristics and quality of running fast. If there are fixed-time processes, everything else will be cammed up to the point where something that has to go the same speed triggers and runs. It can run the same speed slow and at high speed. It would just look really funny because it’s creeping along and then all of a sudden it’s not the same rate. It allows us to optimize the system.
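To make the effect of a fixed-time process concrete, the sketch below works out how much of a station cycle such a process occupies at the two rates quoted in the talk, 25 and 280 parts per minute. The two-tool count is taken from the demo machine description; the 300 millisecond fixed process and the function names are hypothetical.

```python
def station_cycle_ms(parts_per_minute, tools):
    """Cycle time of one station when `tools` parts are handled per cycle."""
    return tools * 60_000 / parts_per_minute


def fixed_process_share(parts_per_minute, tools, fixed_ms):
    """Fraction of the station cycle taken by a process of fixed duration."""
    return fixed_ms / station_cycle_ms(parts_per_minute, tools)


TOOLS = 2               # two-up tooling, as on the demo machine
FIXED_PROCESS_MS = 300  # hypothetical fixed-time process

for rate in (25, 280):
    cycle = station_cycle_ms(rate, TOOLS)
    share = fixed_process_share(rate, TOOLS, FIXED_PROCESS_MS)
    print(f"{rate} ppm: cycle {cycle:.0f} ms, fixed process uses {share:.1%}")
```

At the slow rate the cammed motions stretch out while the fixed process still takes its 300 milliseconds, which is why the machine appears to creep along and then briefly move at full speed.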

12:52:11 | Now getting into the Lego blocks, these are the bits and pieces that make up the Symphoni cell. At the bottom you can see we’re showing these one-meter (mid) modules. They’re the same, they’re prebuilt in advance at ATS. The same cabinet. Each one of those cabinets has its own controller, its own IO, its own servo drives. It takes care of everything inside that individual, one-meter (mid) module. It simply needs power, communications, and a master to follow. On top you can see all the different modules that we have surrounding the cell. Every one of our machines would have a Power Distribution End with a number of extension cables coming out of it with connectors on the end to plug into the back of those individual (mid) modules to provide power. And then the Control End, which drives the SuperTrak. SuperTrak is a linear conveyor and all servo-based. It does the overall master, it’s associated with all of our traceability because the pallets and all of our tracking everything is taken care of there. These individual (mid) modules can either run by themselves or you can plug them into one of these systems and they’ll follow along, geared to that master, but locally taking care of itself.

14:12:08 | One of these (mid) modules can run by itself: same software, same hardware. You can do your validation, initial product development, and process development. As you get into clinical trials, you can connect a few together, start to increase your volume, the number of parts per minute that you’re getting out of it, and then those same modules running the same process, the same software, the same validated work for the same tools, could then be part of your end-of-line commercial high volume production unit.

14:53:03 | This is a very, very powerful tool. Even within ATS, when we’re quoting a job, up front we use the same tool to analyze a customer’s URS at a station level. Each of those modules has our OS built into it. There’s an external tool that we use to configure it. Within that external tool it does all the motion planning, it does all the output camming, all the events that are going to take place. That’s the tool that we use upfront in quoting so that we can determine if there’s enough time. We can’t change physics, so we have to determine millisecond by millisecond, engineered upfront, plan for the entire system, millisecond by millisecond: What’s this thing? Can it do it? Does it stay within the limits? What are accelerations? What are the capacities? Our design team will use the same tool, which will then go to the controls team and the integration team, and they will use that by clicking a transfer button to take what they built in the offline tool and shove it into the controller, which has our operating system built in.

16:13:05 | That operating system is the same in every one of these cabinets. The underlying code is not custom. We’re not writing code for each application. It’s also localized and dedicated to that one engine. It doesn’t really care what the rest of the system is doing. That one module that is focused on extremely efficiently executing a particular process by driving a number of servo axes and a number of IO, it only worries about itself. It runs as an independent machine. If you add other modules, it doesn’t affect what it’s doing. It is not a small piece of code in a bigger piece of code with sequence-based functions that are looking at making decisions based on feedback as it works its way through the process. This is all planned in advance, millisecond by millisecond, up front using simulation tools and engineering, and then loaded into the same hardware physically and replicated around to build a system depending on how many modules in the end that you need.

17:20:08 | If you only need two modules to do work, okay, fine. If you need more, one of these systems can handle up to a total of 20 different stations, at which point you basically break it into a second system and connect them together (with conveyors or section of SuperTrak).

17:37:13 | The previous slide showed the same hardware and the same tools all the way up. What we’re finding is this is a very exciting, powerful option for companies where they can use the very same thing in the lab that they use for clinical trials, instead of coming up with a different process for moderate productivity, for clinical trials, and then something completely different when you get to high volume.

18:01:11 | This brings us to our case study, where we have this great claim that I’ve made that we don’t sacrifice the process, we simply remove inefficiency before and after to buy us as much time in the real world as we can towards the things we can’t control. In automation, things you can’t control include any given quality process, such as the insertion of a pre-filled syringe into an autoinjector body or feeding parts. If a part is coming into the front of the machine through an escapement, you can’t control the physics of that part. You can do some manipulation, but you’re quite limited.

18:55:21 | Symphoni focuses on the fact that I can’t really do anything about it, so I want to give that part as much time as I can to have very high reliability in bringing the part in and moving it through the system, which is one of our early patents. We’re able to do that and put 60-80% of the overall cycle time toward that area we can’t control, and then have the servos do what they are good at: synchronously come to life and work their way up.

19:27:05 | I use amusement rides at theme parks as good examples for this kind of methodology. In a theme park when you get out of the ride, they’ll actually take you up to 17 meters per second. If you go on the ones where they’re trying to make you scream, to take your picture and get some money for it, they start you off slow, they build you up, and then all of a sudden you go and you really feel the accelerations and G-forces, right before they take the picture and then again, they calmly settle you down. That’s the same kind of motion planning that we do. It’s all fifth-order polynomial functions. It’s a very smooth transition to get going, and then you can really hit the gas at 6-8gs, getting where you got to go, then you settle down, come back, leaving the time to process but in between, you can get rid of as much as you can.
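A fifth-order polynomial move can be sketched as follows. The talk only says the profiles are fifth-order polynomials; the specific form used here, with zero velocity and zero acceleration at both ends of the move, is a common textbook choice and is an assumption, as are the function names and the 120 millisecond move time. The 150 millimeter distance matches the stroke limit mentioned earlier.

```python
def quintic_position(t, duration, distance):
    """Position along a rest-to-rest quintic move at time t (0 <= t <= duration).

    s(tau) = 10*tau^3 - 15*tau^4 + 6*tau^5 starts and ends with zero
    velocity and zero acceleration, which gives the smooth ramp-up.
    """
    tau = t / duration
    return distance * (10 * tau**3 - 15 * tau**4 + 6 * tau**5)


def quintic_peak_acceleration(duration, distance):
    """Peak acceleration of the same profile.

    The second derivative 60*tau - 180*tau^2 + 120*tau^3 peaks at
    10 / sqrt(3), scaled by distance / duration^2.
    """
    return (10 / 3**0.5) * distance / duration**2


G = 9.80665            # standard gravity in m/s^2
MOVE_DISTANCE_M = 0.150  # full 150 mm stroke
MOVE_TIME_S = 0.120      # hypothetical move time

peak_g = quintic_peak_acceleration(MOVE_TIME_S, MOVE_DISTANCE_M) / G
halfway = quintic_position(MOVE_TIME_S / 2, MOVE_TIME_S, MOVE_DISTANCE_M)
print(f"peak acceleration: {peak_g:.1f} g, position at half time: {halfway * 1000:.0f} mm")
```

With these assumed numbers the peak lands a little above 6 g, in the same range as the 6-8 g figure mentioned in the talk, while the start and end of the move remain gentle.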

20:23:01 | In this case study we’re inserting a prefilled syringe into an autoinjector body. We looked at doing a couple of different configurations of this, because Symphoni allows you to switch from one configuration to the next. It’s very flexible for running different kinds of products in addition to the standardized elements.

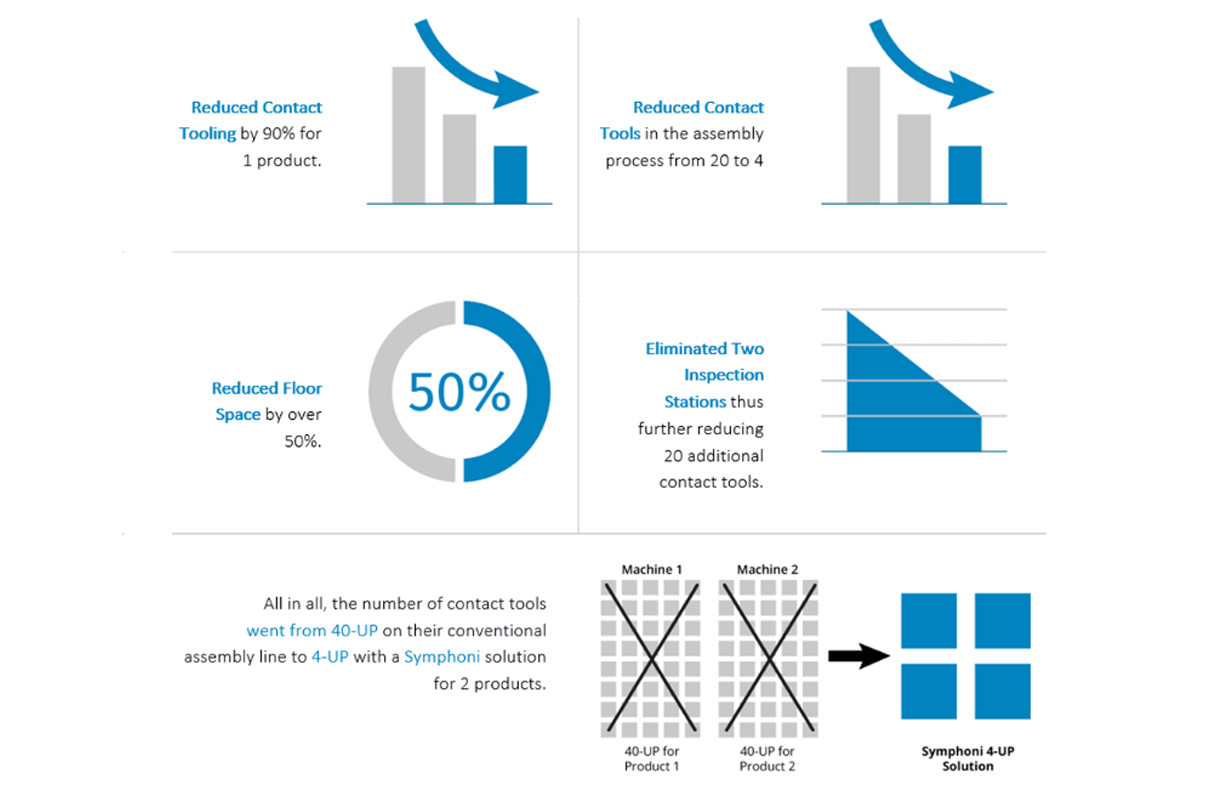

20:49:05 | In this particular case, the client had an existing process with existing equipment running at 320 parts per minute. They wanted to make sure we would maintain all the same process quality they have, but could we get our overall cycle time low enough (750 milliseconds) so that we can reduce tooling? We used waveform signature analysis as a quality event, monitoring the process itself, to also look at collapsing some of the pre-assembly and post-assembly quality stations into a single location.

21:23:22 | The existing machine has a ten-up solution. So, you have 10 tools that are doing the work. Because of the assembly station, it’s 10 tools handing off to 10 tools. There’s a quality station in advance for another 10, and a quality station after for yet another 10. That’s three stations, a total of 40 tools. We’re talking about getting down to four.

21:48:05 | Of course, they didn’t believe us. So we said, Let’s find out together that it’s not possible, because that’s pretty much everybody in my life: people explaining to me the stuff we can’t do. I said, we’ll take one of our modules and we’ll reconfigure it on one of our demo machines. You can come into our facility and do this together and let’s find out. Because if we’re wrong, fair enough. If we’re not, this could be very beneficial to you. To start with the process time itself, when we’re inserting the prefilled syringe into the autoinjector body, it’s completely controlled, completely regulated. The velocities, acceleration, and the forces throughout that entire operation are closely controlled. It’s something we wouldn’t touch, it’s very sacred. We leave it alone. This part of the cycle is where we slow down, making sure our following errors are tight, making sure that the motions meet the specification. We’re not doing that process any different than anybody else.

23:03:14 | When we look at the video of this operation, for Symphoni, it’s 500 milliseconds for us to do the value-add of the insertion, 500 milliseconds for the existing equipment that is on the floor. We’re not doing that any different. The big difference is in the second line where they’re wasting 800 milliseconds before and after to do 500 milliseconds of value-add.

23:28:16 | The Symphoni cell can do the same work and waste 250 milliseconds, and it’s because of all those little bits and pieces I mentioned. I commonly refer to it as an inverse function of a death of a thousand cuts. We’re adding up 5 milliseconds over here, 25 over there. It comes to a big number. We end up with a much more efficient process.
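The figures quoted in this case study can be checked with a few lines of arithmetic. The sketch below assumes the 800 and 250 millisecond figures are the total non-value-add time per cycle, and that each of the four Symphoni tools produces one part per 750 millisecond cycle; the function and variable names are illustrative.

```python
def parts_per_minute(tools, cycle_ms):
    """Output rate when each tool finishes one part per cycle."""
    return tools * 60_000 / cycle_ms


VALUE_ADD_MS = 500          # insertion time, same for both machines
SYMPHONI_OVERHEAD_MS = 250  # non-value-add time on the Symphoni cell
EXISTING_OVERHEAD_MS = 800  # non-value-add time on the existing equipment

symphoni_cycle_ms = VALUE_ADD_MS + SYMPHONI_OVERHEAD_MS
existing_cycle_ms = VALUE_ADD_MS + EXISTING_OVERHEAD_MS

symphoni_rate = parts_per_minute(tools=4, cycle_ms=symphoni_cycle_ms)
symphoni_value_share = VALUE_ADD_MS / symphoni_cycle_ms
existing_value_share = VALUE_ADD_MS / existing_cycle_ms

print(f"Symphoni cycle: {symphoni_cycle_ms} ms, rate: {symphoni_rate:.0f} ppm")
print(f"value-add share: {symphoni_value_share:.0%} vs {existing_value_share:.0%}")
```

Under these assumptions, four tools at a 750 millisecond cycle give the 320 parts per minute target, with about two thirds of each cycle spent on the insertion itself.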

23:51:06 | This is a proof of principle where in real time you can see us doing the insertion work. The first assumption that everybody makes is that’s got to be pretty violent. We must be hurting the components themselves. This is a high-speed camera showing that full rate taking place, where we picked up the prefilled syringe, we come up and align, synchronized with a vertical axis, open the grippers, move back on the fly, continue the operation of installing the prefilled syringe. The whole time we’re monitoring force, monitoring position, and then as soon as we’re done getting out of the way, over to get the next one, in order to reduce cycle time as much as possible.

24:39:22 | If you look at this on the high-speed camera: this is not violent, this is calm. It’s simply targeted. We are very selective of where we’re doing all these functions. We can open up those grippers on the fly and know, because it’s synchronously cammed, that they’re going to open at the right time. This is not like sequence-based code where the event is dependent on monitoring something, making decisions, which means the amount of time it takes is constantly changing. If you run a sequence-based code, your overall cycle timing moves around like an accordion. Each of these operations is synchronous, meaning that the train is leaving the station. For any of the functions, whether it’s vision or any of the things that we’re doing, we build in enough time.

25:32:18 | Because we characterize it, study it, because all the devices on here are devices that we understand, we build that in. We know if we give a vision system a hundred milliseconds to do its thinking and processing, and another 20 milliseconds for buffer, we’re going to read that result in a window that’s synchronous and deterministic at the same point of the cycle every single time. If it does not give us the information, that train is leaving the station on time and it will simply reject the part. We don’t know, or even care why the vision system failed. If it does fail a couple of times, we’ll shut down to figure out what’s going on with the vision system, but that event happens at the same point every single cycle.
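The fixed read window can be sketched as a small decision loop. The 100 millisecond processing allowance and 20 millisecond buffer come from the talk; the function name, the decision labels, and the threshold of two consecutive misses (standing in for "a couple of times") are assumptions for illustration.

```python
PROCESSING_MS = 100
BUFFER_MS = 20
READ_AT_MS = PROCESSING_MS + BUFFER_MS  # result is read at this point every cycle


def run_cycles(result_latencies_ms, max_consecutive_misses=2):
    """Decide what happens on each cycle given when the vision result arrived.

    A latency of None means the vision system never answered. The read
    always happens at READ_AT_MS; a late or missing result rejects the part.
    """
    decisions = []
    misses = 0
    for latency in result_latencies_ms:
        if latency is not None and latency <= READ_AT_MS:
            decisions.append("use_result")
            misses = 0
        else:
            decisions.append("reject_part")
            misses += 1
            if misses >= max_consecutive_misses:
                decisions.append("shut_down")
                break
    return decisions


# Hypothetical arrival times of the vision result on six consecutive cycles.
decisions = run_cycles([95, 130, 110, None, 140, 90])
print(decisions)
```

The cycle never waits for the vision system: the only variable is whether the result was there when the window was read.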

26:18:06 | It allows us to do this synchronous time together, this pick-n-place, and it goes to the top. We’re holding the inside body of the prefilled syringe, we’re coming down with a gripper, and we can tie those together as the engine opens those grippers on the fly. It’s all very tightly regulated. While it’s taking place we have waveform analysis so we can do a signature analysis of the process. This determines if it’s a good product, but it also can detect some of those quality criteria that I mentioned earlier. Some of the events that they were looking for in a quality station in advance of the assembly, we were able to pick up based on the waveform at the station because it’s got enough fidelity in what it’s doing because we’re going very slow, very calm.
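One simple way to picture a signature check is an envelope test on the force trace recorded during insertion. The talk does not say how the waveform analysis is implemented, so this is only an assumed illustration: the function name, the envelope limits, and the force samples are all hypothetical.

```python
def signature_ok(force_trace, lower_limit, upper_limit):
    """Return True if every force sample lies inside its envelope."""
    if not (len(force_trace) == len(lower_limit) == len(upper_limit)):
        raise ValueError("trace and limits must have the same number of samples")
    return all(
        lo <= force <= hi
        for force, lo, hi in zip(force_trace, lower_limit, upper_limit)
    )


# Hypothetical envelope in newtons, sampled at five points along the stroke.
lower = [0.0, 2.0, 4.0, 4.0, 1.0]
upper = [1.0, 4.0, 7.0, 7.0, 3.0]

good_insertion = [0.5, 3.0, 5.5, 5.0, 2.0]
stalled_insertion = [0.5, 3.0, 8.2, 5.0, 2.0]  # force spike mid-stroke

print(signature_ok(good_insertion, lower, upper))
print(signature_ok(stalled_insertion, lower, upper))
```

Because the insertion itself runs slowly, a trace like this can carry enough samples to catch defects that would otherwise need a separate inspection station.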

27:14:09 | We got all the information throughout the entire installation of the syringe. We were able to take the quality station requirements, in advance and after the fact, and compress them all into a single location. We’re looking at a number of different tools, we’re looking at a number of different pieces. But we were able to replicate this.

27:37:03 | The customer came over; they took over the machine and started running it themselves. They made all their product failure modes; they ran it through and picked them all up. In the end, they agreed with us. When they could see and test it, we were able to bring the 40 tools down to four and still achieve output of the 320 parts per minute, as this high-volume solution in a continuous motion, but with the flexibility of the pallets and robotics on a configurable, modular solution, that we can basically build up using the same pieces as we need.

28:17:21 | This case study was very successful: 90% less tooling, using 1 station instead of 3, using 4 tools instead of 40, reduction in very expensive floor space. A very happy success with the customer all the way around.